Cracking the 'Why': How We Rethought Product Performance Monitoring in a Scaling Startup

05 May, 2025


A thread is a fundamental unit of execution within a program, representing a single, sequential flow of instructions.

The market offers numerous Application Performance Monitoring (APM) tools for API server monitoring. While these solutions excel at showing basic metrics like API performance, memory usage, and CPU utilization, they fall short in providing crucial insights into what's happening under the hood.

The Critical Gap: Traditional APMs don't reveal what threads are actually doing or where they're getting stuck. This lack of deep visibility turns scaling into a costly guessing game.

We encountered this problem firsthand when our Python threads became stuck in GIL (Global Interpreter Lock) contention. Our APM dashboard showed high resource usage, but couldn't tell us why. Without understanding the root cause, we made the classic mistake:

The result? Significantly higher infrastructure costs with minimal performance improvement.

At Clickpost, we believe in going beyond surface-level monitoring. Our goal is to:

This article explains how we developed our in-house thread profiling system that provides deep application insights without impacting performance. You'll learn how we moved from reactive scaling to proactive optimization through better visibility into our application's behavior.

Like many development teams, we've been scaling our systems for years. But when it came to managing threads in our Python and Java servers, we were essentially playing a guessing game.

Traditional approaches focused on monitoring fundamental system resources: CPU usage, memory consumption, disk space, and network bandwidth. Tools like top, htop, vmstat, and iostat provided insights into server performance, with administrators setting up alerts when thresholds were exceeded.

1. Monitor CPU usage

2. If CPU usage was high, reduce threads

3. If CPU usage was low, increase threads

4. Repeat until things seemed "okay"
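The tuning loop above can be sketched in a few lines. This is only an illustration of the heuristic, not our production code: the function name, thresholds, step size, and limits are all made-up values.

```python
def adjust_thread_count(current_threads, cpu_percent,
                        high=80.0, low=40.0, step=2,
                        min_threads=1, max_threads=64):
    """Return a new thread count using CPU usage as the only signal.

    All thresholds and limits are illustrative assumptions.
    """
    if cpu_percent > high:
        # CPU looks saturated: shrink the pool
        return max(min_threads, current_threads - step)
    if cpu_percent < low:
        # CPU looks idle: grow the pool
        return min(max_threads, current_threads + step)
    # CPU is in the comfortable band: leave the pool alone
    return current_threads
```

Notice what the function cannot see: it has no input for lock waits, I/O waits, or queued requests, so it will happily add threads to a pool that is already stuck waiting on a lock.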

But this approach had serious problems:

The CPU wasn't our only bottleneck. We discovered several hidden performance killers:

How busy are our threads actually?

How many requests are waiting in queue for available threads?

How much time do threads spend waiting for locks?

How much time do threads spend waiting for I/O operations?

The catch? We needed answers without modifying our application code or impacting performance.

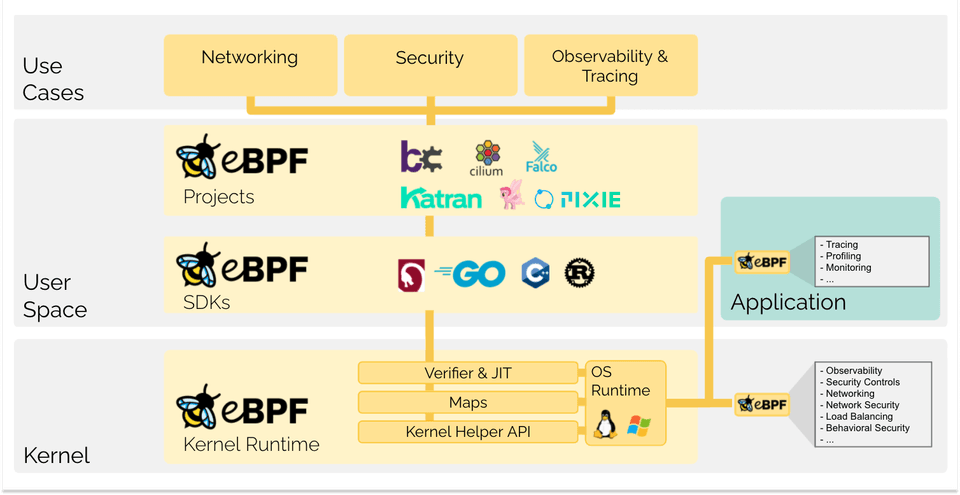

After exploring various options, we discovered eBPF (Extended Berkeley Packet Filter) - a powerful Linux technology that lets us peek inside our system's kernel without breaking anything.

Think of eBPF as a way to insert tiny "spies" into your operating system. These spies can watch what's happening and report back with very little overhead.

Originally designed for monitoring network packets, eBPF has evolved into a Swiss Army knife for system monitoring. It lets you:

Real-world analogy: Imagine being able to place invisible cameras at every door in a busy office building to see who goes where, how long they spend in each room, and where bottlenecks occur - all without anyone knowing the cameras are there.

Our Investigation Process

Before we could build our solution, we needed to understand exactly how our servers handle requests. We used Linux's `strace` command to trace the system calls made by our Python and Java servers.

What We Discovered: The Life of a Web Request

Every web request follows a predictable pattern at the system level:

The exact system call names can vary depending on your system (for example, `accept4` instead of `accept`).

Key insight: Each connection gets a unique identifier called a "file descriptor" (fd). This became the foundation of our monitoring system.
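To make the file descriptor idea concrete, here is a small, self-contained sketch of a server handling one connection. It is a toy for illustration, not one of our servers: the function names `serve_one` and `demo` are hypothetical, and under `strace` the Python calls below may show up under slightly different system call names (for example `accept4` or `recvfrom`).

```python
import socket
import threading

def serve_one(listener, seen):
    """Handle exactly one connection and record its file descriptor."""
    conn, _addr = listener.accept()      # kernel returns a new fd for this connection
    seen["fd"] = conn.fileno()           # the integer the kernel uses to identify it
    data = conn.recv(1024)               # read the request
    conn.sendall(b"echo:" + data)        # write the response
    conn.shutdown(socket.SHUT_RDWR)      # signal that the exchange is finished
    conn.close()                         # release the fd so it can be reused

def demo():
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))      # port 0: let the OS pick a free port
    listener.listen(1)
    port = listener.getsockname()[1]

    seen = {}
    worker = threading.Thread(target=serve_one, args=(listener, seen))
    worker.start()

    with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
        client.sendall(b"ping")
        reply = client.recv(1024)

    worker.join()
    listener.close()
    return seen["fd"], reply
```

Calling `demo()` returns the connection's file descriptor together with the reply. Because the fd is released on close and later reused for a different connection, any tracking keyed on it has to be cleaned up when the connection ends.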

Additional Complexity

Real servers are more complex than this simple flow. Python servers also use:

But we kept our focus on the core pattern to avoid overcomplicating our solution.

The Monitoring Strategy

We identified the key functions to monitor:

1. For thread synchronization:

2. For I/O wait tracking:

3. For request tracking:

Our eBPF programs calculate six critical metrics:

1. Request Latency - How long does each request take from start to finish?

How it works: When a connection is accepted (`accept` system call), we store its file descriptor and a timestamp. When the connection is closed (`shutdown` system call), we compute the difference between the two timestamps.
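The bookkeeping behind this metric can be sketched in plain Python. This is a userspace simulation under stated assumptions, not the eBPF program itself: the dictionary stands in for an eBPF hash map, and the class and method names are hypothetical.

```python
class RequestLatencyTracker:
    """Simulates the accept/shutdown bookkeeping for request latency."""

    def __init__(self):
        self.start_ns = {}       # fd -> accept timestamp (stand-in for an eBPF map)
        self.latencies_ns = []   # completed request latencies

    def on_accept(self, fd, now_ns):
        # Remember when this connection was accepted
        self.start_ns[fd] = now_ns

    def on_shutdown(self, fd, now_ns):
        # Remove the entry so a reused fd never inherits a stale timestamp
        started = self.start_ns.pop(fd, None)
        if started is None:
            return None          # connection was accepted before tracing began
        latency = now_ns - started
        self.latencies_ns.append(latency)
        return latency
```

For example, `on_accept(7, 1_000)` followed by `on_shutdown(7, 4_500)` yields a latency of 3,500 ns. If several processes are traced at once, the key would need to include the process ID, since file descriptors are only unique within a process.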

2. Number of Queued Requests - How many requests are waiting for available threads?

How it works: We track file descriptors that have been accepted but have not yet been read by a `read` system call. These represent requests waiting in the queue.
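A rough sketch of that idea, again simulated in plain Python rather than written as an eBPF program. The class name and the `on_close` handler are assumptions added for illustration.

```python
class QueueDepthTracker:
    """Counts connections that were accepted but not yet read."""

    def __init__(self):
        self.waiting = set()     # fds accepted but not yet picked up by a thread

    def on_accept(self, fd):
        self.waiting.add(fd)

    def on_read(self, fd):
        # First read means a worker thread has picked the request up
        self.waiting.discard(fd)

    def on_close(self, fd):
        # Assumed cleanup: a client may disconnect before anyone reads
        self.waiting.discard(fd)

    def queued(self):
        return len(self.waiting)
```

With three accepted connections and one of them read, `queued()` returns 2. A number that keeps growing while CPU stays low is a strong hint that threads are blocked rather than busy.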

3. Thread Utilization - What percentage of our threads are actually doing work?

How it works: Every time a thread reads a request (`read` system call), we record its thread ID. Every 10 seconds, we analyze which threads were active.
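One way to turn those recorded thread IDs into a percentage is sketched below. The class is hypothetical, and `pool_size` is an assumption: the total number of worker threads has to come from somewhere, such as the server's configuration.

```python
class ThreadUtilization:
    """Tracks which threads read at least one request in the current window."""

    def __init__(self, pool_size):
        if pool_size <= 0:
            raise ValueError("pool_size must be positive")
        self.pool_size = pool_size   # assumed to be known from server config
        self.active = set()          # thread IDs seen in the current window

    def on_read(self, thread_id):
        self.active.add(thread_id)

    def flush(self):
        """Return utilization for the window and start a new one."""
        percent = 100.0 * len(self.active) / self.pool_size
        self.active.clear()
        return percent
```

The caller would invoke `flush()` on a timer, every 10 seconds in the scheme described above. With a pool of 8 threads and reads seen from 2 distinct threads, `flush()` returns 25.0.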

4. Lock Latency - How much time threads spend waiting for locks.

How it works: We measure the time between entry to and return from the `pthread_mutex_lock` function.
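The entry and return pairing can be sketched as follows. This is a plain Python simulation with hypothetical names; in a real eBPF setup the two handlers would correspond to probes on function entry and function return, and the dictionaries to eBPF maps.

```python
from collections import defaultdict

class LockLatencyTracker:
    """Accumulates per-thread time spent inside pthread_mutex_lock."""

    def __init__(self):
        self.entered_ns = {}                    # thread ID -> entry timestamp
        self.total_wait_ns = defaultdict(int)   # thread ID -> accumulated wait

    def on_lock_enter(self, thread_id, now_ns):
        # A thread can only be inside one blocking call at a time,
        # so the thread ID is enough to pair entry with return
        self.entered_ns[thread_id] = now_ns

    def on_lock_return(self, thread_id, now_ns):
        started = self.entered_ns.pop(thread_id, None)
        if started is not None:
            self.total_wait_ns[thread_id] += now_ns - started
```

An uncontended lock returns almost immediately, so large totals here point directly at contention rather than at ordinary locking.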

5. I/O Wait Per Thread - How much time threads spend waiting for disk or network operations.

How it works: We measure how long a thread stays switched out, observing context switches through the kernel's `finish_task_switch` function.
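A sketch of how context switch events could be turned into per-thread waiting time. This is an assumption about the bookkeeping, simulated in plain Python with hypothetical names: each event carries the thread leaving the CPU and the thread taking over.

```python
from collections import defaultdict

class OffCpuTracker:
    """Accumulates how long each thread spends switched out."""

    def __init__(self):
        self.switched_out_ns = {}             # thread ID -> time it left the CPU
        self.off_cpu_ns = defaultdict(int)    # thread ID -> accumulated off-CPU time

    def on_switch(self, prev_tid, next_tid, now_ns):
        # prev_tid is leaving the CPU: remember when
        self.switched_out_ns[prev_tid] = now_ns
        # next_tid is coming back: charge it for the time it was away
        left_at = self.switched_out_ns.pop(next_tid, None)
        if left_at is not None:
            self.off_cpu_ns[next_tid] += now_ns - left_at
```

Time measured this way covers every reason a thread was off the CPU, including waiting for its turn to run, so separating I/O waits from other waits would need additional filtering that is not shown here.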

6. Total Request Count - Overall request volume for capacity planning.

The Technical Implementation

The Foundation: File Descriptors

In Linux, "everything is a file" - including network connections. Each connection gets a unique file descriptor (fd) within a process. This fd becomes our tracking key.

read() system call → Mark thread as active, track processing start

write() system call → Track response sending
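Putting the file descriptor at the center, the per-connection state can be pictured as one small record that each system call updates. The sketch below is a plain Python illustration, not the actual eBPF data layout: the field names, the `(pid, fd)` key, and the derived timings are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

@dataclass
class ConnState:
    accepted_ns: int
    first_read_ns: Optional[int] = None
    first_write_ns: Optional[int] = None
    worker_tid: Optional[int] = None

class ConnectionTable:
    """One record per connection, keyed by (pid, fd)."""

    def __init__(self) -> None:
        self.conns: Dict[Tuple[int, int], ConnState] = {}

    def on_accept(self, pid, fd, now_ns):
        self.conns[(pid, fd)] = ConnState(accepted_ns=now_ns)

    def on_read(self, pid, fd, tid, now_ns):
        # First read: a thread picked the request up, processing starts
        state = self.conns.get((pid, fd))
        if state is not None and state.first_read_ns is None:
            state.first_read_ns = now_ns
            state.worker_tid = tid

    def on_write(self, pid, fd, now_ns):
        # First write: the response has started going out
        state = self.conns.get((pid, fd))
        if state is not None and state.first_write_ns is None:
            state.first_write_ns = now_ns

    def queue_wait_ns(self, pid, fd):
        state = self.conns.get((pid, fd))
        if state is None or state.first_read_ns is None:
            return None
        return state.first_read_ns - state.accepted_ns

    def processing_ns(self, pid, fd):
        state = self.conns.get((pid, fd))
        if state is None or state.first_read_ns is None or state.first_write_ns is None:
            return None
        return state.first_write_ns - state.first_read_ns
```

Keying on `(pid, fd)` rather than `fd` alone reflects the fact that a descriptor number is only unique within a single process.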

Smart Data Collection

eBPF provides built-in functions that make monitoring easier:

Our eBPF-based monitoring revealed several eye-opening insights:

Armed with real data, we made targeted improvements:

Performance Improvements

Non-Intrusive Monitoring - Unlike application-level profiling tools, eBPF monitoring requires no application code changes and adds very little overhead.

Kernel-Level Accuracy - By monitoring at the kernel level, we see exactly what the operating system sees - no sampling or estimation.

Language Agnostic - Our solution works for Python, Java, Go, or any language that uses standard system calls.

Production Safe - eBPF programs are verified by the kernel before they are loaded, which guards against kernel crashes and unsafe memory access.

Open Source Contribution - GitHub: https://github.com/clickpost-ai/thread_profiling/tree/main

CPU usage alone is a poor indicator of server performance

Thread management is more complex than it appears on the surface

Kernel-level monitoring provides insights that application-level tools miss

eBPF is a powerful tool for understanding system behavior without performance penalties

Data-driven scaling decisions beat guesswork every time